Data Quality Services (DQS) introduces some interesting concepts.

1) The two main pillars of DQS are the knowledge base (KB) and domains.

2) In layman's terms, I would think of a KB as a table and domains as the fields of that table. Like fields, domains have data types, constraints, and other attributes. Domains can also be hooked up to reference data providers to obtain an enumeration of valid and possible values.

3) Composite domains combine two or more domains into a more complex unit, somewhat analogous to calculated fields.

4) When data is validated against these domains, the process works much like a regular expression match: each value is checked against the rules defined for its domain.

5) After the data is matched against the domain, DQS applies a fuzzy-matching algorithm similar to the one used by the SSIS Fuzzy Lookup transform. This is what enables concepts such as a "confidence" score for each matched value and options to correct the data.
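To make points 4 and 5 more concrete, here is a minimal Python sketch of the general idea: a rule check first, then a fuzzy match against known values whose similarity score plays the role of "confidence". This is not how DQS is implemented or configured (that happens in the Data Quality Client, not in code). The `Domain` class, the `cleanse` function, and the two thresholds are hypothetical, Python's `difflib.SequenceMatcher` is swapped in as the similarity measure, and the status names are only loosely modelled on the categories the Data Quality Client reports.

```python
import re
from dataclasses import dataclass, field
from difflib import SequenceMatcher


@dataclass
class Domain:
    """Hypothetical stand-in for a DQS domain: a named 'field' with a rule and known values."""
    name: str
    pattern: str                                    # regex rule that every value must satisfy
    valid_values: set = field(default_factory=set)  # known-good values, like KB or reference data


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity in [0, 1]; difflib is an assumption, not DQS's algorithm."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def cleanse(domain: Domain, value: str,
            auto_correct: float = 0.8, suggest: float = 0.6) -> tuple:
    """Return (status, output_value, confidence) for a single input value.

    The thresholds are hypothetical illustration values, not DQS defaults.
    """
    # Step 1: rule check, analogous to matching the value against a regular expression.
    if not re.fullmatch(domain.pattern, value):
        return ("Invalid", value, 0.0)
    # An exact hit on a known value needs no correction.
    if value in domain.valid_values:
        return ("Correct", value, 1.0)
    if not domain.valid_values:
        return ("New", value, 0.0)
    # Step 2: fuzzy match against the known values; the best score acts as the "confidence".
    # sorted() keeps tie-breaking deterministic.
    best = max(sorted(domain.valid_values), key=lambda v: similarity(value, v))
    score = similarity(value, best)
    if score >= auto_correct:
        return ("Corrected", best, score)   # confident enough to fix automatically
    if score >= suggest:
        return ("Suggested", best, score)   # plausible, left for a data steward to review
    return ("New", value, score)            # no close match; possibly a new valid value


if __name__ == "__main__":
    country = Domain("Country", r"[A-Za-z ]+", {"Canada", "United Kingdom", "United States"})
    for raw in ["Canada", "Canda", "Kanadaa", "Mexico", "Can4da"]:
        status, output, confidence = cleanse(country, raw)
        print(f"{raw!r:>10} -> {status:<9} {output!r} ({confidence:.2f})")
```

Run as a script, "Canda" is auto-corrected to "Canada", "Kanadaa" is only suggested as "Canada", "Mexico" is flagged as new, and "Can4da" fails the rule check. Keeping a separate, lower threshold for suggestions reflects the idea of confidence: high-scoring matches are fixed automatically, while borderline ones are left for a data steward to decide.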

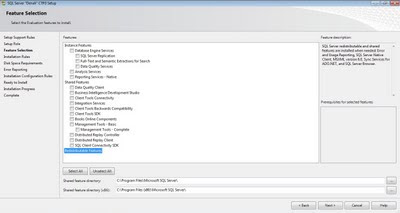

A tool packed with all these features is very useful for data stewards, i.e., data quality analysts and operators, and that tool is the Data Quality Client. Viewed from a higher level, these points collectively fit together to function as a transform. The DQS Cleansing Transform is a new transform introduced in SSIS Denali. A very informative article from the SSIS Team demonstrating the use of this transform can be read here. Another informative article on the DQS Cleansing Transform is available from the DQS Team, which can be read here.

Creating a knowledge base and domains is much like creating tables and fields. If DQS provided an interface to import tables from source systems as a KB and domains, just like a Data Source View, it would make the process much easier and the tool friendlier. Honestly, I feel that organizations already have much of their knowledge embedded in the metadata of their database objects, and recreating that knowledge as a KB and domains is not a lightweight exercise. Let's see whether such a facility gets added to DQS in the future.

The bright side of DQS is that cleansing becomes more decoupled and more powerful. The Data Profiling Task and Data Profile Viewer would complement the capabilities of DQS, since data profiling and data cleansing are closely associated processes. I would not be surprised if the Data Profile Viewer and Data Profiling Task get merged into DQS and a new transform called DQS Profiling Transform gets added to the Data Flow section of the SSIS toolbox, alongside the DQS Cleansing Transform!
