In this recipe, we will look at how to create a typical trellis chart and use it to analyze data in a more sophisticated manner. The beauty of this recipe is how easily such a complex graph can be authored, without any report programming effort or knowledge. I will intentionally not define a trellis chart right away, because the goal is to see how the resulting graph enables a deeper analysis. Through the course of the discussion, you will develop an understanding of how building a trellis chart differs from building a regular chart.

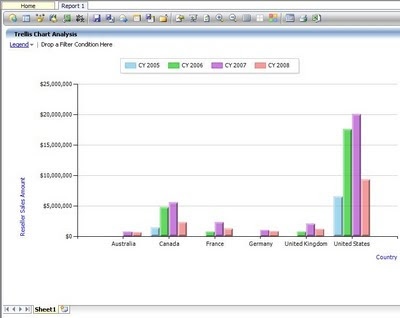

Using the AdventureWorks cube, I have created a regular column chart that displays the Reseller Sales Amount measure for the Year attribute of the Date dimension, sliced by the Country attribute of the Geography dimension. As you can see in the below screenshot, data for some years is missing for some countries, so those bars simply do not appear.

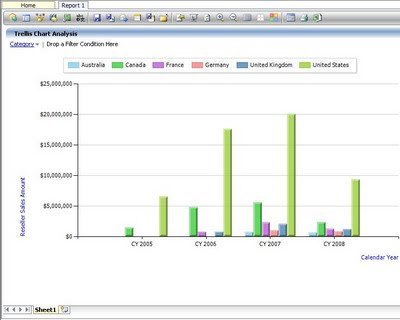

To analyze the graph better, many would want to rotate the axes so that the Year attribute is on the Y-axis and the data is sliced by Country. After all, reporting is mostly done on a reporting period such as a financial year or calendar year. Rotating the axes is as easy as clicking a menu option in Analyzer for the Quick Chart component. After rotating the axes, the chart looks like the screenshot below.

Now comes the real challenge: the analysis itself. If you look carefully, the first limitation of this chart is that data for the same country is not shown side by side, so the comparison has to be done mentally by the user. If we rotate the axes, we get data for the same country side by side, but at the cost of losing the sequence of reporting periods, which makes the chart even harder to analyze. Because the data in this chart is modest in size, this analysis is still feasible, but with a larger volume of data the problem gets worse.

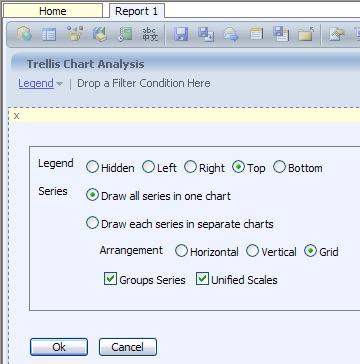
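Conceptually, rotating the axes of a column chart only swaps which attribute provides the categories and which provides the series. The minimal Python sketch below illustrates this on flat (year, country, amount) rows. The figures are made-up placeholders rather than AdventureWorks values, and the helper `to_chart` is hypothetical; it says nothing about how Analyzer works internally.

```python
# Hypothetical placeholder rows (year, country, amount); NOT real AdventureWorks data.
ROWS = [
    ("CY 2007", "Germany", 10.0),
    ("CY 2007", "United States", 300.0),
    ("CY 2008", "Germany", 12.0),
    ("CY 2008", "United States", 250.0),
    ("CY 2008", "Canada", 90.0),
]


def to_chart(rows, category_index, series_index):
    """Group flat rows into {category: {series: amount}} for a column chart.

    Hypothetical helper: the two indexes pick which column drives the
    category axis and which column drives the series.
    """
    chart = {}
    for row in rows:
        category, series, amount = row[category_index], row[series_index], row[2]
        chart.setdefault(category, {})[series] = amount
    return chart


# Original layout: years on the category axis, one series per country.
by_year = to_chart(ROWS, category_index=0, series_index=1)
# Rotated layout: countries on the category axis, one series per year.
by_country = to_chart(ROWS, category_index=1, series_index=0)

print(by_year["CY 2007"])    # {'Germany': 10.0, 'United States': 300.0}
print(by_country["Canada"])  # {'CY 2008': 90.0}
```

Canada simply has no entry for CY 2007, which corresponds to a missing bar in the chart rather than a bar of height zero.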

The solution to this problem is a pivoted view of the data that is still represented in a chart-based format; this is known as a trellis chart. Achieving this in Analyzer is just a matter of a few clicks: you can edit the settings of the chart to display each series in a separate chart.

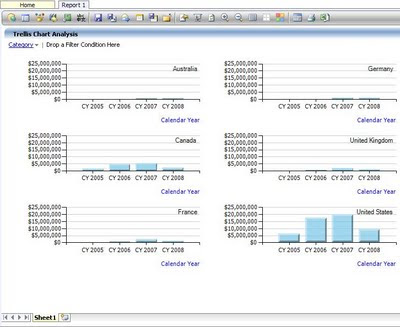

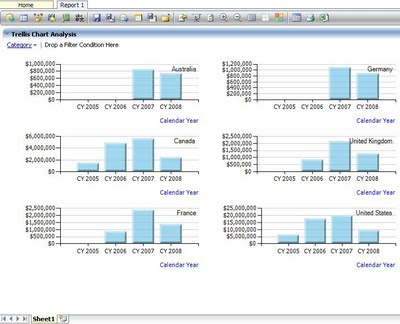

After you select the “Draw each series in separate charts” setting, the chart takes the shape shown in the screenshot below.

Using this trellis chart representation, you can analyze the data for each country individually across all the reporting periods, and also compare one country with another for each year or across the entire period simply by comparing the sizes of the bars, since every chart uses exactly the same scale.
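The idea behind “Draw each series in separate charts” can be sketched in the same way: instead of drawing every series in one chart, the rows are split into one panel per series, and each panel keeps the reporting periods in sequence. This is only a conceptual illustration with placeholder data; `trellis_panels` is a hypothetical helper, not part of Analyzer.

```python
from collections import defaultdict

# Hypothetical placeholder rows (year, country, amount), deliberately unordered;
# NOT real AdventureWorks data.
ROWS = [
    ("CY 2008", "Germany", 12.0),
    ("CY 2007", "United States", 300.0),
    ("CY 2007", "Germany", 10.0),
    ("CY 2008", "Canada", 90.0),
    ("CY 2008", "United States", 250.0),
]


def trellis_panels(rows):
    """Split rows into one panel per country (one chart per series).

    Each panel lists (year, amount) points sorted by year label, so the
    reporting periods stay in sequence inside every panel. Sorting the
    labels as strings works here because they all share the "CY " prefix.
    """
    panels = defaultdict(list)
    for year, country, amount in rows:
        panels[country].append((year, amount))
    return {country: sorted(points) for country, points in panels.items()}


panels = trellis_panels(ROWS)
for country, points in panels.items():
    print(country, points)
```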

After this analysis, you might find that some countries performed poorly compared to others, but because the shared scale is so large, the exact reseller sales amount for each year is not visible. For example, in the chart for Germany you can hardly make out the sales in CY 2007 and CY 2008. This is also a limitation of a regular column chart: you cannot have individual scales for each country or reporting period, because all the data is combined in one chart. A trellis chart should offer this flexibility, and as expected, Analyzer provides it to report authors. If you edit the settings of the chart and uncheck the “Unified Scales” option, as shown in the above screenshot, you get the chart shown in the screenshot below, which solves this issue.

With scales adjusted individually for each chart, you can analyze the data for each country on its own, and when you want to compare data across charts, it’s just a matter of switching unified scales back on.
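The effect of the “Unified Scales” option can be illustrated with a little arithmetic. In the hypothetical sketch below, each bar's height is its value multiplied by an assumed panel height of 200 pixels and divided by the panel's axis maximum, with axes assumed to start at zero. With unified scales, every panel uses the largest value across all panels as its maximum; without them, each panel uses its own maximum. The numbers are placeholders, not actual sales figures, and the helpers are not Analyzer's implementation.

```python
# Hypothetical placeholder panels (country -> [(year, amount)]); NOT real data.
PANELS = {
    "Germany": [("CY 2007", 10.0), ("CY 2008", 12.0)],
    "United States": [("CY 2007", 300.0), ("CY 2008", 250.0)],
}

# Assumed drawing height of each panel in pixels; value axes assumed to start at zero.
PANEL_HEIGHT_PX = 200


def axis_maxima(panels, unified):
    """Return the value-axis maximum used by each panel."""
    own_max = {name: max(amount for _, amount in points) for name, points in panels.items()}
    if unified:
        # Unified scales: every panel shares the overall maximum.
        shared = max(own_max.values())
        return {name: shared for name in panels}
    # Individual scales: each panel keeps its own maximum.
    return own_max


def bar_heights(panels, unified, height=PANEL_HEIGHT_PX):
    """Scale each bar to pixels against its panel's axis maximum."""
    maxima = axis_maxima(panels, unified)
    return {
        name: [round(amount * height / maxima[name], 1) for _, amount in points]
        for name, points in panels.items()
    }


print(bar_heights(PANELS, unified=True)["Germany"])   # [6.7, 8.0]
print(bar_heights(PANELS, unified=False)["Germany"])  # [166.7, 200.0]
```

With unified scales, the small series is squeezed into bars only a few pixels tall, much like the Germany example; with individual scales, its bars fill the panel, but their heights can no longer be compared directly with the bars in other panels.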

With many reporting platforms, this kind of report would generally require two different reports and some programming effort. With Analyzer, once you are familiar with the report authoring interface, it’s just a matter of a few clicks. You can try out the evaluation version of Analyzer to learn about more such amazing report authoring capabilities.
