Sunday, October 31, 2010

SQL Azure Reporting versus SSRS : Challenges in cloud based reporting

I'm reading: SQL Azure Reporting versus SSRS : Challenges in cloud based reportingTweet this !SQL Azure Reporting is soon expected to be available on Azure platform, and as the name suggests, it is expected to cater reporting out of SQL Azure. A nice comparison of cloud based SQL Azure reporting and on-premises SQL Server Reporting Services can be read from this page.

Nice part about this cloud based reporting is that one can author report from cloud and host it on the cloud. But when authoring is done on the cloud, the only data source available would be SQL Azure. This makes the scope quite limited for the use of such reports. Earlier too, it was possible to create reports out of SQL Azure databases using SSRS, and one of my popular articles on how to implement the same can be read from here. Instead of being a PaaS / SaaS solution, I see it just as an extension to SQL Azure feature set, something similar to export data functionality. Support for reporting from on-premise data using WCF / RIA services would have made this reporting solution much admirable.

It's also being heard on a few blogs, that one can now wash away their hands from maintaining the reporting infrastructure as reporting is available on cloud, but there are a lot of questions before this statement. In my views, there must be some exceptional reasons to implement a reporting infrastructure for a cloud based database. Instead of it, the best solution is a PaaS / SaaS reporting solution.

Saturday, October 30, 2010

Windows Azure Market Place ( Data Market and App Market ) - Potential jobs for BI Tools

I'm reading: Windows Azure Market Place ( Data Market and App Market ) - Potential jobs for BI ToolsTweet this !Windows Azure Market Place is a cloud based service offering on the Azure platform from Microsoft . For those who are completely unaware of Azure, I can say that I find it synonymous to Amazon Web Services in the making. Services available or to-be available on this platform can be divided in two parts: Data Market and App Market.

Data Market which was formerly know as Microsoft Codename "Dallas" is now commercially available, and this announcement was made very recently. Also many other significant announcements for the Windows Azure Market Place, which can be read from here. App Market which is another section of services related to application development and hosting would be commercially released, probably early next year.

Data Market which was formerly know as Microsoft Codename "Dallas" is now commercially available, and this announcement was made very recently. Also many other significant announcements for the Windows Azure Market Place, which can be read from here. App Market which is another section of services related to application development and hosting would be commercially released, probably early next year.

My area of interest revolves around data market. Data Market being a platform of data / content providers is a potential future candidate to be considered as a vital data source on the cloud. Traditionally we were used to see data sources as only in-premise databases like SQL Server, Oracle, Teradata, DB2 and others. But with the advent of cloud, we also see SQL Azure as a data source today. In my views if this platform evolves considerably, there is a bright scope that this is the probable new data source on the cloud.

Also this opens up jobs for MS BI Tools, especially SSIS and SSRS. I definitely see a requirement to merge data from different data providers that one might have subscribed, and create reports which is facilitated by Excel / Excel Add-In presently, can be replaced by SSRS. Data access from this platform is facilitated through REST APIs or OData, and MS BI Tools lack this support. But by the time DataMarket gains adoption as well as maturity from partners and consumers, we can expect MS BI tools to provide support for the same. Powerpivot is already aligned by Microsoft to serve the data analysis needs. Interestingly Tableau which is one of the leaders in data analysis software with it's huge range of data visualizations, is one of the technology partners and is providing support for data analysis from DataMarket. Check out the announcement of integration between Tableau and DataMarket from here.

To have a first look at the DataMarket section of Windows Azure Market Place, check out this video.

Wednesday, October 27, 2010

Use of SQL Server 2008 R2 Express Edition with Microsoft and Non-Microsoft BI platforms

I'm reading: Use of SQL Server 2008 R2 Express Edition with Microsoft and Non-Microsoft BI platformsTweet this !SQL Server Express Edition is one of the most admired databases for BI platforms. Before a couple of years, I would not have been able to make this statement, but through the due course of experiences of my career, I can make this statement quite confidently today. Non only on microsoft platform, but non-microsoft based BI solutions are quite happy to include express edition as one of the constituents of a BI solution for either housekeeping tasks or to catalyze data / data processing. Some of the interesting use of express editions are listed below.

1) Proof Of Concept development: During the POC phase, projects are least likely to procure licenses. One of the first benefits of express edition is that it's license free for use in development as well as production, and does not come with a time bomb in it. As far as programmability features are concerned, it comes with almost all the features that an enterprise edition contains. So if the requirement is just a database container, express edition is one of the best starters on the menu.

2) Test data store: Instead of using resources on the server with higher editions of sql server, express edition can be used as a warehouse to store test data. When the development team requires test data during the development phase, they can always synchronize their local instances with test data from an express edition which can be treated as a test data server. This express instance can be handed over to a business analyst who would generally prefer to own a database free of complexity.

3) Metadata provider: Often many legacy systems or systems like SAP or other CRM systems emit out data in text / CSV files. Now these files many times contain non-printable characters or other junk characters, and the issue occurs during the import process. As a part of structural testing, legacy data files can be imported into express instance which can be installed at the source. Importing full / sample data into express edition can provide concrete metadata structure to the data (which does not exist in text / csv files) as well as test the import process. This can be done right at the source free of cost with express edition, making ETL processes and data migration more smooth. Many non-microsoft platforms would consider express edition as a tempting choice for purposes similar to these.

4) Temporary Staging: SQL Server 2008 R2 express edition has the database size limit of 10GB. This is a reasonable size for considering express instance as a temporary staging server in your ETL solution to stage master and/or transactional data.

5) BI Housekeeping: A production / UAT / QA server DBA would not allow external applications to connect to these servers. A project might want to retain a repository of execution and performance data history, and express edition is one of the best choice for such purposes. One can use any number of analysis / reporting tools on this edition without any security concerns.

Above mentioned are some of the uses of express edition in a BI solution development environment. 10GB DB size, SSMS, almost full DB programmability features, and license free production use are the major features of express edition. Keeping these features in view, you can consider SQL Server Express edition to economically catalyze your BI solution development.

Labels:

SQL Server 2005,

SQL Server 2008,

SQL Server 2008 R2

Monday, October 25, 2010

Data Source View versus View in Data Source for SSIS , SSAS and SSRS

I'm reading: Data Source View versus View in Data Source for SSIS , SSAS and SSRSTweet this !There is a vast difference between data source views and views in data source. We all recognize data source views i.e. DSV as one of the means to means to implement UDM. But I am of the opinion that when views in data source are not feasible to implement, one should resort to using DSVs. By views in data source, I mean that if you are using SQL Server as your OLTP data source, create views in SQL Server instead of creating lot of named queries / calculated columns using DSVs. Some of the advantages of using views in SQL Server for ETL / Cube Design / Reporting are as below:

1) Control and Filter over metadata: Mostly in SSIS a lazy implementation involves directly reading all the columns from a table, and tons of warnings in the progress tab as most of those columns are never used. With views you can always place a filter over the required number of columns, adjust data type casting as per requirement, and manipulate the metadata as required.

2) SQL advantage: With views in your database, you have entire SQL programming power at your disposal providing you the ability to create simple to most complex calculations. Also conditional logic, default values, null checks and many other validation and configuration can be placed at this level.

3) Adaptable to change: In case if the structure of your tables (fact / dimension) in your relational data warehouse change, or the structure of your OLTP source tables have changed, views can shield your ETL / Cube data source as well as save the solution from any kind of changes which would be required in case of DSV. Views can even help you to craft your snowflake schema into a star schema.

4) Transparency and Maintainability: If a new developer steps-in to maintain and enhance your legacy solution, swimming in your DSV to figure out the calculations can be a big challenge in itself. On the other side, analyzing a SQL select query can be much easy to figure out the calculations than analyzing each calculated column / named query in a DSV. Also a view can be used as a part of metadata / dependency analysis with SQL Server or other database analysis tools.

I do not intend to mean that DSVs are useless and views are the best. But a right balance between views in a data source and data source views (DSVs) can make your solution more robust and developer friendly than blindly using DSVs.

Friday, October 22, 2010

Web based OLAP client for SSAS : Report Portal

I'm reading: Web based OLAP client for SSAS : Report PortalTweet this !In my views, OWC (Office Web Components) available in SSMS is by no means a professional OLAP browser. Excel is one of the widely used client for browsing OLAP cubes, but there are some limitations with it when one wants to consider it as an enterprise client, some of which are listed below:

1) It needs to be installed on every client workstation

2) Data visualizations available are more suited for operational reporting than analytical reporting

3) There are almost no features for collaboration i.e. sharing generated reports with other users on a common platform unless you use Office Web Apps or Sharepoint

4) No spatial data support either in consumption or presentation with Bing Maps / Google Maps

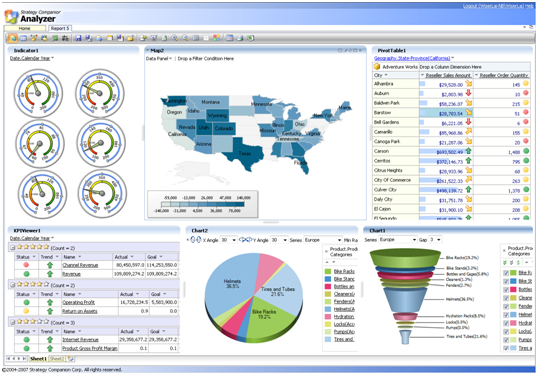

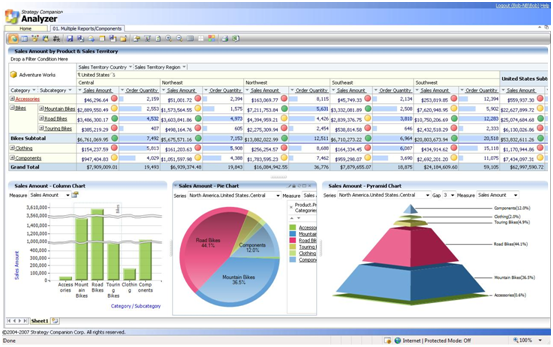

Actually Excel has the capability to use OLAP / SSAS cube as a data source, but that does not mean that this capability can be treated as the primary purpose of using Excel. In one of my posts, I mentioned about Analyzer which is a product from Strategy Companion. Recently I came across one another such product - Report Portal.

Zero footprint, SaaS, Cloud are some of the buzz words while selecting a technology / product for solution development, and Report Portal is also a zero footprint OLAP web client for SSAS. In my view, this product lacks that professional and sleek features that is expected from a web client. Documentation is quite poor, export formats of reports are limited, there is no mention of SaaS offering. But some of the noteworthy features of this product are as below:

1) Report portal has got very distinguished data visualizations, which are quite impressive from a data browsing point of view. You would not use these kind of data visualizations generally for reporting, but to develop analytical reports, these visualizations can be quite helpful for analysis. Check out the Pie-Chart Tree Report, which is one of the quite unique kind of data visualization for data analysis.

1) It needs to be installed on every client workstation

2) Data visualizations available are more suited for operational reporting than analytical reporting

3) There are almost no features for collaboration i.e. sharing generated reports with other users on a common platform unless you use Office Web Apps or Sharepoint

4) No spatial data support either in consumption or presentation with Bing Maps / Google Maps

Actually Excel has the capability to use OLAP / SSAS cube as a data source, but that does not mean that this capability can be treated as the primary purpose of using Excel. In one of my posts, I mentioned about Analyzer which is a product from Strategy Companion. Recently I came across one another such product - Report Portal.

Zero footprint, SaaS, Cloud are some of the buzz words while selecting a technology / product for solution development, and Report Portal is also a zero footprint OLAP web client for SSAS. In my view, this product lacks that professional and sleek features that is expected from a web client. Documentation is quite poor, export formats of reports are limited, there is no mention of SaaS offering. But some of the noteworthy features of this product are as below:

1) Report portal has got very distinguished data visualizations, which are quite impressive from a data browsing point of view. You would not use these kind of data visualizations generally for reporting, but to develop analytical reports, these visualizations can be quite helpful for analysis. Check out the Pie-Chart Tree Report, which is one of the quite unique kind of data visualization for data analysis.

2) Collaboration and organization of the analyzed data, which can be saved in the form of reports, is considerably mature.

3) Metadata can be browsed very efficiently, and this product makes a very extensive use of XML/A ( XML for Analysis ). The browsing features are quite friendly to take away the pain of forming MDX queries.

If you use it as a tool to save your OLAP analysis i.e. the results that you gathered while browsing or analyzing OLAP data, and want to stick it in the form of reports on a collaborative platform, it's a nice web client with zero footprint installation ( as it's a web based solution ). But if you are looking out to use as a full fledged self-service enterprise reporting solution out of your OLAP, I would feel much more comfortable and confident in Analyzer than Report Portal.

A wise strategic move can be to use Report Portal when you are taking your baby steps in your OLAP reporting i.e. the phase when you are developing your data marts / data warehouse. This product could well support your OLAP browsing needs efficiently as well as facilitate reporting at the same time, keeping the pressure of immediate reporting from your evolving data warehouse, at bay. When the solution is in the next level, you can choose a SaaS reporting solution like Analyzer and retire Report Portal. Please keep in view, this always depends upon scenarios and it's not a generalized strategy. The key difference between Analyzer and Report Portal is that Analyzer is a reporting solution, and Report portal is a web client and reporting is one of it's features and not a primary function.

3) Metadata can be browsed very efficiently, and this product makes a very extensive use of XML/A ( XML for Analysis ). The browsing features are quite friendly to take away the pain of forming MDX queries.

If you use it as a tool to save your OLAP analysis i.e. the results that you gathered while browsing or analyzing OLAP data, and want to stick it in the form of reports on a collaborative platform, it's a nice web client with zero footprint installation ( as it's a web based solution ). But if you are looking out to use as a full fledged self-service enterprise reporting solution out of your OLAP, I would feel much more comfortable and confident in Analyzer than Report Portal.

A wise strategic move can be to use Report Portal when you are taking your baby steps in your OLAP reporting i.e. the phase when you are developing your data marts / data warehouse. This product could well support your OLAP browsing needs efficiently as well as facilitate reporting at the same time, keeping the pressure of immediate reporting from your evolving data warehouse, at bay. When the solution is in the next level, you can choose a SaaS reporting solution like Analyzer and retire Report Portal. Please keep in view, this always depends upon scenarios and it's not a generalized strategy. The key difference between Analyzer and Report Portal is that Analyzer is a reporting solution, and Report portal is a web client and reporting is one of it's features and not a primary function.

Monday, October 18, 2010

StreamInsight Tutorial , StreamInsight Video Tutorial , StreamInsight Webcasts , StreamInsight Labs and StreamInsight Presentations

I'm reading: StreamInsight Tutorial , StreamInsight Video Tutorial , StreamInsight Webcasts , StreamInsight Labs and StreamInsight PresentationsTweet this !In my views, StreamInsight is one of the most undiscovered enhancements of SQL Server 2008 R2. StreamInsight is a development platform for targeting complex event processing (CEP), and though this is newly introduced in SQL Server 2008 R2, but CEP is not a new market. CEP is a very well know term in the BI parlance and there are already other players in this game. Some of the reasons for limited awareness / popularity of StreamInsight is that it involves a bit of .NET programming ( LINQ ), applications of this feature is limited and this is not something that masses would use frequently unlike other database development features or business intelligence related features. Also in my present knowledge there is no book available that is dedicated to StreamInsight.

In such cases when reference material available is scarce; articles on blogs and community websites, webcasts, technical presentation by experts, and video recordings of demonstrations are a very effective and economic way to fill up this reference material gap. Nick MacKechnie has shared an exhaustive resource collection of StreamInsight related webcasts, videos, presentations, hands on labs and demos. Though you might not be interested immediately to get your hands-on StreamInsight, but this page is definitely worthy of a place in your bookmarks section. Check out this StreamInsight resource collection from here.

Labels:

Downloads,

SQL Server 2008 R2,

StreamInsight

Friday, October 15, 2010

MS BI Interview questions book covering SSRS SSIS SSAS interview questions and answers

I'm reading: MS BI Interview questions book covering SSRS SSIS SSAS interview questions and answersTweet this !Recently one of my blog readers asked me a query, whether I know of any book that provides interview questions and answers related to SSIS , SSAS and SSRS i.e MS BI related topics. Candidate who posted this question has/had prepared for the interview, but wanted such book to gain confidence for appearing interviews. I felt that I would discuss this topic in the form of a post, rather than replying in the form of a comment, as I find many of my blog readers sending me queries through email or in the form of comment, specifically for interview related topics.

Firstly, to answer this question straight, there is no book dedicated to MS BI interview questions. Now the question comes that how to get a mock interview session or how to evaluate oneself to test whether you are ready for a MS BI interview. Here are a few steps that should help you prepare for the same.

1) Bank on your area of expertise : As I always say, no interview is a generalized interview. You need to be very thorough in your area of expertise and prepare in accordance to the role that you are targeting. If you are appearing as an MDX / Cube developer, preparing thoroughly in T-SQL won't add much value. Even if you score passing marks in that area, that won't impact your selection as your interviewer should be sensible enough to understand that you would rarely work in that area. So instead of concentrating your energies in all the areas (unless you are targeting an architect / MS BI Tech Lead role), concentrate your energy on your area of strength.

2) Mock Test / Practice Test questions : Many certification exams books like Self Paced Training Kit , Exam Cram and others come with a lot of practice questions (approx min 300 questions) which are scenario based. For example, self paced training kit book from microsoft press comes with a practice test software and lots of practice questions in each area of MS BI. And when you appear this practice exams, based on your performance, it would also recommend the areas where you need improvement. One additional benefit of such tests is that your vocabulary (theoretical knowledge) would improve a lot.

3) Theory of Top 5 : One more theory that you can use is pick up top 5 hardest questions in SSIS , SSAS and SSRS. Start with it and try to figure out the maximum questions you can ask from the start-up question. For ex, "What is an Execution Tree in SSIS" and from this question I can ask another question "How can I find number of trees in my package" , "What are blocking and non-blocking transformations" etc. If you are able to score 70% in all these areas, you have a great probability of crossing the interview. In summary, think like an interviewer to appear for an interview.

4) Community Service : Confused, how this is going to help for interview preparation / gaining confidence to appear for an MS BI Interview ? By community service I mean, join MSDN SSIS / SSAS / SSRS forums and spend around a month addressing various questions. You would generally get a feel of how good are you at addressing regular technical issues.

5) Prepare your Notes : The moment you start preparing for your interview, keep collecting your questions. After spending around a month or two and following the above 4 steps, you would have collected decently large number of questions instead of wandering from website to website for interview questions.

I am of the opinion that create your own book, instead of depending or searching for books related to interview questions. I have not appeared for any job interviews from the past 3.5 years, but when I would start looking out for a change, I would definitely practice this theory :-)

Thursday, October 14, 2010

How to apply Conditional Split in a SSIS package without using Conditional Split transform

I'm reading: How to apply Conditional Split in a SSIS package without using Conditional Split transformTweet this !Conditional Split is a very simple and effective transform, and is one of the most commonly used transforms in a SSIS package. But those who do not pay attention to detail or those who are do not work regularly / extensively in SSIS, often miss the detail that once a record matches a criteria, it's moved to the first matching output path. So each output path has exclusive records, and no repeated records.

Many times we come across a situation where we have records that are matching more than one criteria and we need such records in more than one output paths. Generally we take help of multicast transforms in such scenarios, but that is not an efficient design, and definitely such design won't scale. When the question / task comes of splitting data, right from the schools / parlance of SSIS, we are used to answer / use "Conditional Split" Transform. But we know the limitation of this transform for the scenario in question and design issues with Multicast transform. So what is the way to conditionally split data in an SSIS package without the help of this transform ?

Today one of my article got published and it's titled " Intelligent Conditional Split in an SSIS package "; it is aimed to present a solution at this issue, and the same can be read from here. The origins of this technique is derived from the niche art of custom SSIS components / transforms development methodology. If this solution is wrapped as a custom component, it can be used very well as an Intelligent Conditional Split component. To avoid ruining the value of my article, I would limit my explanation and would let you check out the original article. Feel free to share your opinion about this article.

Tuesday, October 12, 2010

MS BI POC and SQL Azure POC for better ROI on future projects

I'm reading: MS BI POC and SQL Azure POC for better ROI on future projectsTweet this !In my views, POCs ( Proof Of Concepts ) is a very versatile way of creating and promoting business, especially for vendors and solution providers. There was a very interesting article on POCs on BeyeNetwork some days back, that talked about how decisions based on limited scoped POCs can be catastrophic for an enterprise.

If POCs are carried out with a viewpoint of extracting results that can be translated into patterns / benchmarks that can catalyze agile and/or economic BI solution development methodology as well as provide avenues for appliance / product development, such POCs have a very high probability of an assured Return On Investment (ROI) against the investments done for conducting such POCs. For example, MDX is quite a complex query language, and developers need time and experience to master the same. If templates or CLR class libraries are created using ADOMD.Net and MDX, same can be reused via template explorer in SSMS and .Net application development, which can effectively boost up the development process and reduce the learning curve. This is very similar to DAX available in Powerpivot. Also if such libraries are developed with a broad vision in mind, it can be promoted as a off-the-shelf component. And I am sure that the ROI would be exponentially more compared to investments.

Below is a brief list of few such POCs, which has the potential to provide a very high ROI, provided it's carried out with a vision and target in mind.

1) SaaS solution development methodology using MS BI Stack and SQL Azure

2) Pattern development for synchronizing in-premise SQL Server and SQL Azure

3) Plugging / Integrating Cloud gateway tools at different phases of BI lifecycle

4) Economic BI solution development using SQL Azure / SQL Express , SSRS and Sharepoint Foundation 2010

5) MDX Script library in compiled / script format

6) Pattern development for economic cloud database (SQL Azure) consumption in BI and application development projects

7) Pattern development for Inter cloud integration and multi cloud incorporation into MS BI projects for provider-dependency free cloud consumption in MS BI projects.

I am not claiming that these are the best ones, but I can say that these are the best ones in my mind.

Friday, October 08, 2010

Connection error in SQL Azure

I'm reading: Connection error in SQL AzureTweet this !

Some of the major reasons for connection issues in SQL Azure are as follows:

- Lock Consumption

- Log File Size

- Uncommitted Transactions

- Transactions blocking system calls

- TempDB Usage and Size

- Excessive Memory Usage

- Maximum Database Size

- Transaction Termination

- Denial of Service Attacks

- Network Problems

- Failover Problems

Confused / Curious / Impressed with the above points ? Well, I cannot take credit for what I have summarized from a SQL Azure whitepaper. Same can be read from here. If not the entire whitepaper, at least the above list can be quite handy while troubleshooting for the root cause of connection issues with SQL Azure. Also with little modification, this list can be translated into development standards for application interfaces to connect with SQL Azure.

Monday, October 04, 2010

Self service enterprise reporting solution for data warehouse powered by SSAS

I'm reading: Self service enterprise reporting solution for data warehouse powered by SSASTweet this !In a typical Microsoft solution involving MS BI and .NET, after the data warehouse development generally a BI development team hands over the next lap of development to the application development team. BI team and/or App team remains responsible for developing reports that are hosted within the proprietary .NET application and/or Sharepoint portal. The disadvantage is that, it takes away the self-service feature from the business users. When the requirement is "race against time", i.e. when one needs reporting solution asap which can serve enterprise class BI reporting on the top of your data warehouse or cubes, the selection moves towards ready to use reporting solution that can sit on the top of your BI artifacts.

One such solution is a product from Strategy Companion known as Analyzer. This reporting solution is said to be developed using ASP.NET and DHTML. Some of the features that I found pretty regular in line with what other such reporting products might offer are reporting using KPIs and indicators, drag-and-drop report creation, security integration with LDAP and Kerberos, different visualizations and gauges, integration with IE, SSRS, PPS, Sharepoint, Excel. But some of the features are quite impressive which any project would desire, and they are as follows:

1) Zero foot-print installation: Thin client is one of the most sought after requirements for any kind of enterprise wide client access. If you can facilitate your analytics requirement just using a browser, and still facilitate drag-and-drop report creation which for self-service report creation with rich report development features, it's very much favorable for any business.

2) Software as a Service solution: No business would want to invest straight away for a full fledged enterprise wide license, just based on an evaluation version, until and unless the product is a premiere class product listed in Gartner's report. Also small and medium businesses might want such reporting in an on-demand fashion. Analyzer provides the option for an SaaS deployment too.

3) Logic as a service: This is not a standard term, it's just my synonym for the term webservice. Analyzer features can also be used in the form of webservices, to embed reporting functionality in existing applications. This is a nice to have feature, but in my opinion, I would not recommend for this option. Instead there are better options than this, but still if you have deployed Analyzer for your enterprise wide BI reporting requirements, and some proprietary applications might be getting left out, this option can help in such cases.

4) Support for load-balancer / clusters: Analyzer can be deployed on IIS that is deployed on multiple servers and managed by a load balancer.

One such solution is a product from Strategy Companion known as Analyzer. This reporting solution is said to be developed using ASP.NET and DHTML. Some of the features that I found pretty regular in line with what other such reporting products might offer are reporting using KPIs and indicators, drag-and-drop report creation, security integration with LDAP and Kerberos, different visualizations and gauges, integration with IE, SSRS, PPS, Sharepoint, Excel. But some of the features are quite impressive which any project would desire, and they are as follows:

1) Zero foot-print installation: Thin client is one of the most sought after requirements for any kind of enterprise wide client access. If you can facilitate your analytics requirement just using a browser, and still facilitate drag-and-drop report creation which for self-service report creation with rich report development features, it's very much favorable for any business.

2) Software as a Service solution: No business would want to invest straight away for a full fledged enterprise wide license, just based on an evaluation version, until and unless the product is a premiere class product listed in Gartner's report. Also small and medium businesses might want such reporting in an on-demand fashion. Analyzer provides the option for an SaaS deployment too.

3) Logic as a service: This is not a standard term, it's just my synonym for the term webservice. Analyzer features can also be used in the form of webservices, to embed reporting functionality in existing applications. This is a nice to have feature, but in my opinion, I would not recommend for this option. Instead there are better options than this, but still if you have deployed Analyzer for your enterprise wide BI reporting requirements, and some proprietary applications might be getting left out, this option can help in such cases.

4) Support for load-balancer / clusters: Analyzer can be deployed on IIS that is deployed on multiple servers and managed by a load balancer.

If I am given the requirement of agile DW development and facilitating reporting on the top of my MS BI artifacts, and provided that neither I do have enough funds to invest into ramping up a team to carry out a full fledged development life cycle nor I have time to wait for the availability of such solution, I would opt for tools driven DW and BI Reporting development. For the same I would pick up products like WhereScape RED for agile DW development and Strategy Companion's Analyzer for BI reporting. I do not intend to say that these are the best options, but these are definitely the two prominent ones.

Subscribe to:

Posts (Atom)

Article

Article