Wednesday, April 27, 2011

MS BI Infrastructure Architect - Developing readiness for the role and responsibilities

I'm reading: MS BI Infrastructure Architect - Developing readiness for the role and responsibilitiesTweet this !Architect is a very appealing word to all the aspiring technical minds, but most people do not realize that architect is an adjective-free role. Have you ever heard terms like SSIS Architect, SSAS Architect, SSRS Architect, PPS Architect etc.. ? If yes, then I would say that Architect word has been loosely used instead of the term SME.

The first difference between developers and architects in my vision is the broadness of domain. Most developers would stick to a technology instead of a platform, whereas this ideology does not suit the JD for an architect. If you have the ideology that "I have worked with SQL Server for 5 - 8 yrs, I am good at T-SQL programming, SSIS, SSRS and have theoretical idea of DW. And if you ask me what is Sharepoint, .Net, Webservices, Cloud, Infrastructure, Data Modeling, etc.. this is not my domain.", I would stamp "Biased MS BI Developer" on your CV. Architect requires changing many hats like Technical Architect, Data Architect, Solution Architect etc, and being an Infrastructure Architect is one such hat. If you are an Architect, many a times you would find yourself in a role where you are the Infra Architect + Data Architect + Application Architect + Solution Architect, and you might be given few technology specific SMEs for consulting. I have been in such situations as I had experiences with all these individual roles as a tech lead through the course of my career. Whatever I am sharing is based on my experiences.

When a solution encompassing application development technologies and MS BI technologies are promoted from environment to environment i.e. from dev -> staging -> APT -> Prod, this requires infrastructure estimation, capacity planning, software configuration, server connection topology etc before the environments are built. If you think that to setup such environment, one can just procure servers, add memory and rig the systems, probably you must be setting up infrastructure for solutions of very modest size. If you are developing solutions for an enterprise class client, there is a high probability that there would be a Data Center with shared application environments where your solution would be hosted.

Here comes the first lesson as well as challenge for the Infra Architect. Virtualization is the SQL of Infrastructure capacity planning. You would have to deal with infrastructure teams, who would discuss, advise and challenge your estimations and talk about technologies like Hyper-V, VCPUs, RAM, Ports and Protocols. You might be using MS BI Stack, Sharepoint and .NET Stack, Microsoft System Center and each of these would have different connectivity and hardware requirements. At a minimum you should know what MS BI stack needs in terms of infrastructure design. Ideally in a virtualization environment, development servers run on 4 VCPU, 4 - 8 GB RAM and Production servers of modest size run on 8 VCPU and approx 16 GB RAM. If you are not aware of what is a core, vcpu, ports etc you should start developing an understanding of the same.

The next major challenge you would be faced with is memory capacity planning, this mostly depends upon data and load. Application Performance Testing environments would be setup to test performance, and you should learn how to interpret the results from those environments. Testing teams would be using Load Runner kind of tools to perform a load testing, and you would be getting regular reports containing performance counters, concurrent users, memory utilization, CPU utilization etc. This is second area where would act as your profiler.

The final major challenge is allocation right amount of memory for different aspects of the solution. For example, if you have SQL Server and MS BI technologies, you need to allocate memory for logs, backups, installation, data etc. Based on this calculation you need to estimate total memory requirements and also setup designs for hosting application environments on the planned infrastructure.

You would not be practically building servers and installing softwares. In an enterprise class IT environment, there are dedicated teams for the same, but the order to march forward comes from the architect of the solution and not the architect of infrastructure teams. So at the minimum you have to create a technical architecture diagrams from infra setup to communicate your design and estimation. Being in such a role is a challenge, and fortunately or unfortunately I have been in such role and had learned a lot from the same. I hope this post brings some vision to professionals prone to such challenges. If you need to borrow my experience, feel free to drop me an email.

Sunday, April 24, 2011

When should you use thrid-party MS BI tools and utilities ?

I'm reading: When should you use thrid-party MS BI tools and utilities ?Tweet this !Most companies would not entertain use of third-party tools and utilities, for the obvious reasons of cost, developer expertise and corporate policies. But it is not that tools should not be used at all, it's just that there should be correct reasons when you should consider the options of using freeware or third-party licensed tools and utilities. Below are some of the reasons in my viewpoint when usage of tools can be justified and considered.

1) Shortage of skills: As an architect or tech lead or team lead or dev lead, you would always want to have "The A Team" for your project. But this is hardly the case if real-life situations. Most IT companies who serve their clients as services / solution provider, houses thousands of employees and it's always a mix of knowledge workers with all the different level of proficiencies in skill sets. For example, for your reporting project where you plan to use SSRS, you might get a Crystal Reports developer who have been trained in SSRS for a week. You might be in a situation that you need to start development instantly and you would have to live with such team composition. At such times, tools can make your life easier and take care of many things that you would not get out-of-box.

2) Time to market: Say you are having a product development project in-house or for a client, more the time you take to release the product in production, more is the loss of business. Developing components in-house is always possible provided one has the luxury of time, but in real-life you would find such projects very rare where you have such luxury. In such cases using third-party products can be a huge catalyst and in many cases it would be the only option. Say you need an ETL component that reads data from Amazon EC2 EBS data store, connect to EBay web services and makes a transaction. Developing such component can take a significantly long time, and third-party components which does the same can be your silver bullet in this case.

3) Development Accelerators: In medium sized companies or in the in-house IT department of an organization, IT staff would be very limited. You might not be in the need of any specialized component that is available only from third-party market, but the set of artifacts that you need to develop would be such huge that it would take lot of hands to complete and manage the task within a given time frame. For example, you intend to create a data warehouse end-to-end solution where you need DW, schema, ETLs, Data Marts, Stored Procs in OLTP etc. All these are regular development artifacts, but if I ask that the data warehouse needs to be delivered in 15 days, however modest the complexity of the solution may be, a modest staff would not be able to complete it. You need a reasonably large team size with a lot of experienced hands. In case you do not have this option, third-party tools can be your rescue boat.

4) Value Addition: For this category, most companies would not entertain tools unless and until they are either free or the technology / product that has been used is seriously paralyzed and some oxygen is required to survive the same. Freewares from community portals like Codeplex can be a huge value addition even if the technologies you are using are up to date. For example BIDS Helper is one of the highly recommended add-on to BIDS, and practitioners know the real value of the same.

I would love to hear your viewpoint on this topic. Feel free to comment on this post or email me your viewpoint.

Sunday, April 17, 2011

Planning MS BI project / solution deployment

I'm reading: Planning MS BI project / solution deploymentTweet this !In my views, "Failing to Plan means Planning to Fail". Most project managers and technical leads undervalue the importance of deployment in the solution development life cycle. Deployment planning is often considered even out of solution development perspective, and the complexity of this process is only realized when the deployment sits on the hot seat. In any professional IT products and services providing organization, you would find regular environments like Development, Staging (UAT), APT (Application Performance Testing) and Production. Each of these environments can have different corporate policies that implies a set of security and access methodologies in these environments. So if you have not planned your deployment strategy, each environment can compel you to make changes to your development.

A typical MS BI solution would consist of technologies like Microsoft .NET, SQL Server - SSIS, SSAS, SSRS, MOSS with/without enterprise services, Microsoft System Center, Team Foundation Server, Microsoft Office among the major application and database components. Below are some of the points to keep in view, which can have a huge impact on the overall solution development, and ignoring these points generally would emerge as a show-stopper to develop a generic build and deployment package.

1) Server and Instance names: Generally to make speedy progress development in development systems, often developers use hard-coded database names / instance names / server names. Different environments can have different server and instance names.

2) Service Accounts: Initially when development machines are setup for the team, developers tend to use whatever account has the best privileges or their own account. This would immediately fail in environments where a separate team would be deploying your build and there would be a separate dedicated service account created for an application as per the corporate policies. Also in many secure production environments, network admins keep on changing service account password at regular intervals. So if you are using user id as well as password to access web services and you have not programmed your services to pick up credentials from a configuration store, you just hit another show stopper.

3) Software Configuration: MOSS and .NET development can require creating many proxies and web / service references, which are mostly sourced or build in the form of DLLs. These can make your solution dependent on certain libraries, which are hard to detect as the development would federated across various development machines. This creates a discrepancy in software configuration. Again certain developers might be using different IDEs with add-ons that would dump external DLLs, for example AJAX Toolkit with VS 2008 and Sharepoint Designer. This needs to be carefully planned, as your server environment might not contain all these libraries.

4) Security Configuration: This is one of the most complex bit. Different technologies talking to each other would be communicating mostly through Windows Integrated Authentication or Active Directory Federation Services ( Windows Identity Foundation / Single Sign-On) configured with a claims / token based mechanism. It is quite possible that development environments can have different security configuration than other environments. During development this goes easily out of focus and the prize to pay for this is very heavy afterwards.

Site Collections / Web applications created on MOSS would be using HTTP / HTTPS with certificates which would need to be installed and configured on the site. Corporate policy might not allow you to copy certificates across servers, and this may come to light only when you might want to deploy your solution and infrastructure teams would raise a red flag for certificates.

I have been personally evident of a scenario where different developers developed their SSIS packages with ProtectionLevel property of the package set to EncryptSensitiveWithUserKey. Developers keep joining and leaving the team and they log on to systems using their Active Directory IDs. When the solution was deployed on a different environment, the solution started failing. Now these packages can be fixed only using the credentials of the users who developed them, and this iceberg almost sank the ship.

5) Automation: Many things are done manually like executing DB Scripts, deploying SSRS reports using BIDS, deploying MOSS site using Sharepoint Designer. You would find yourself with a new development cycle, when infrastructure teams responsible for staging and production environments would refrain you from access to these environments.

6) Shared environments: Solutions that are equipped with exclusive servers are quite lucky and also are generally first of their breed or mission critical. In any organization that is having an infrastructure setup in data centres for their IT Services would have shared environment for different application services like SSRS Reporting Servers, MOSS Application Servers etc. One may overlook this detail considering that fact that the same version of the service is used. But at certain levels overlooking key piece of information can be very heavy, for example, one may change web.config file which is the configuration file for entire server. Shared servers would be catering an array of applications, so when you deploy your solution you would not have the luxury of changing these files. At this point again your solution would fail.

Planning your infrastructure followed by deployment can provide insight into many restrictions and dependencies that you may discover very late. And such discoveries can either break your solution or invite a huge effort to develop your deployment setup. Remember one thing, "Failing to Plan means Planning to Fail" !!

Wednesday, April 13, 2011

Excel Services Technical Evaluation : Considerations for using Excel Services in your BI solution

I'm reading: Excel Services Technical Evaluation : Considerations for using Excel Services in your BI solutionTweet this !Technology architecture is one of the foundation for evaluating whether it would be the right choice to cater requirements of your solution. A general human characteristic is that "perception becomes reality". Flashy graphics, elegant color, super speedy slice and dice are such bullets that most business users cannot dodge, but here is where architects need to pitch-in to find the how does the entire machinery work i.e. architecture of the product. Below mentioned are certain characteristics of Excel services which one should keep in view while technical evaluation.

1) Excel services is an enterprise edition only feature in MOSS 2007 and MOSS 2010. So if you are planning to use lower editions of MOSS, this feature won't be available.

2) MOSS 2010 has REST API to support excel services which helps to bring selective data at client side. This API is not available in MOSS 2007.

3) Ability to query data from relational tables and display it in a tabular format is not supported out-of-box. With UDFs this is possible, but it would require custom .net programming, deploying the same on sharepoint. You define the functions when you author the workbook, but you see effect only when the workbook is accessed inside the excel web access web part on the moss site on which excel services is activated.

4) Standard connection methodology to connect to data sources is using ODC files stored in data connection libraries which would be configured as trusted for excel services. Dynamic parametrized queries are not supported at all in excel services. The only way to achieve a flavor of it is by using UDFs only.

5) Fetching data in a pivot table from OLAP data sources is well supported out-of-box. The report that you built out of this data can work smoothly if you have filters fields within the workbook itself. If your filter values are flowing outside your excel workbook, you would be required to use filter web parts.

6) Many features of excel which are frequently used by excel users are not supported, for ex. freeze panes, external pictures etc. So do not be in the impression that excel services is a full fledged excel on sharepoint.

7) If you intend to show data with user specific authorization, you need to contain all the data in the excel workbook that you would host on sharepoint, which in turn would be displayed to users from excel web access web part. Passing parameters to data sources from the client side through excel workbooks is not a straight-through process.

8) You can filter the data visible to end users in the workbook, but if the user chooses to edit the workbook by downloading it locally, the entire data is available to users.

9) You can define parameters in excel workbooks when they are published to sharepoint. The same parameter values can be fed in by users, and the entire report (i.e. data in the workbook) can be programmed using simple excel formulas to respond to the parameter values.

10) Excel is used as the authoring mechanism for excel workbooks and this workbook acts as the template for the data that would be hosted in this workbook from data sources. Conditional formatting is very well supported, but for any web based formatting would require using JQuery on the sharepoint page where excel web access web part would be hosted.

Few helpful links to get started with excel services:

- http://technet.microsoft.com/en-us/library/cc262899(office.12).aspx#subsection8

- http://msdn.microsoft.com/en-us/library/aa973804(v=office.12).aspx#Y2512

- http://blogs.msdn.com/b/cumgranosalis/archive/2006/03/24/excelserviceshelloworld.aspx

- http://blogs.office.com/b/microsoft-excel/archive/2008/10/15/using-office-data-connection-files-odc-and-the-dataconnections-web-part-in-sharepoint-to-specify-external-data-connections-in-newly-created-excel-workbooks.aspx

- http://office.microsoft.com/en-us/sharepoint-server-help/connect-filter-web-parts-to-excel-web-access-HA010105470.aspx?CTT=5&origin=HA010105476

Summary: My conclusion for excel services is that it's a suitable candidate when you need excel like capabilities over static data. It's a nice candidate for what-if analysis. If you intend to use it in the way you use SSRS with OLTP sources, Excel Services is definitely NOT the right choice. It's a good candidate for context-sensitive non-parameterized data, which is mostly the nature of data in OLAP sources having structures like dimensions and hierarchies. It's definitely not the right choice where you need to display data dynamically, real-time, and based on conditional parameterized querying mechanism.

Saturday, April 09, 2011

Infrastructure design of a Microsoft Business Intelligence solution

I'm reading: Infrastructure design of a Microsoft Business Intelligence solutionTweet this !In the job description of a Technical Architect, one often finds few items that architects might want to avoid getting involved. This is about fitting nuts, bolts and wires to rig different servers and components. Yes, you guessed right, it's designing the infrastructure and connecting all server and components at the physical layer. Personally I do not enjoy this task a lot, but still it drops on me as a part of my job. The major challenge in this task remains to keep a track of what ports are supported by corporate policies on which servers. Again different server products talk on certain default and other supported ports. It is very easy to get lost in this spider web of connections. Again on the top of these port configurations, there are protocols like HTTP, TCP etc, firewalls and federation servers for single-signon. It always helps to have a handy reference checklist of these port and protocols that make different servers and server technologies talk to each other.

In any typical Technical Architecture document, at a minimum, you specify details on how developers would connect to your application server which would in-turn connect to DB server, and these details without fail would need ports and protocols related details. This can grow as complex as the number of servers and components you have in your solution design. Hardware estimation and sizing is one other such area, but you might find sizing tools for the same which can provide an estimate based on the projections you provide for your target environment. Below are two such diagrams from a Sharepoint perspective which gives an overview of what kind of details you would require for an infra design perspective to rig your solution.

In any typical Technical Architecture document, at a minimum, you specify details on how developers would connect to your application server which would in-turn connect to DB server, and these details without fail would need ports and protocols related details. This can grow as complex as the number of servers and components you have in your solution design. Hardware estimation and sizing is one other such area, but you might find sizing tools for the same which can provide an estimate based on the projections you provide for your target environment. Below are two such diagrams from a Sharepoint perspective which gives an overview of what kind of details you would require for an infra design perspective to rig your solution.

Image Courtsey: MSMVPS.com

Wednesday, April 06, 2011

Developing an operational report that looks pre-analyzed using Analyzer

I'm reading: Developing an operational report that looks pre-analyzed using AnalyzerTweet this !Reports can be classified in two types from a higher level: Operational and Analytical. These two types of reports differ in the ways they represent information and the purposes they serve. Before we start with the Analyzer recipe that we would discuss in this article, let's try to understand the gap that exists between operational and analytical reports and then we will discuss how Analyzer can help to bridge this gap with minimal effort.

Business decisions are basically long-term, short-term or day-to-day in terms of scope. Operational reports are mostly used to cater to day-to-day operations. Considering this scope, the amount of data in an operational report is detailed. It is easy to get carried away with the assumption that operational reports have data from OLTP systems and the scope of data in terms of time scale is daily, weekly or of similar duration. But in my opinion this is a myth, and I am sure that if you ask seasoned reporting practitioners, they would agree with me. The way data is represented on a report mostly determines the utilization value of a report as an operational report / analytical report.

Generally for an analytical report, cubes are used as a data source and the report is mostly represented as a dashboard. One common issue that most poorly designed dashboards suffer is presenting too much information on the dashboard with highly detailed gauges. If a report can't serve the very purpose of an analytical report, which is to represent data in a way that requires minimum manual analysis of the details, while still providing a great amount of analytical value, even if it sources data from a cube it would be used as an operational report and not an analytical report.

Now the question comes up about how to bridge the gap between an operational report and analytical report? The answer for the same is appropriate data representation. Let's try to create an example using Analyzer, where we would try to give an analytical flavor to a report that looks operational, and sources data from a cube.

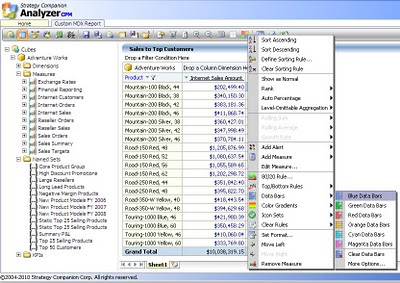

As you see in the below screenshot, I have created a basic report showing "Internet Sales Amount" and "Reseller Sales Amount" for a few top selling products. For this report the data is sourced from the Adventure Works cube in a Pivot Table. In order to compare the sales value for different products in each field, one needs to manually analyze the weight. A weight representation of each value in its respective field would make the report easier to analyze. In the below screenshot, you can see that Analyzer provides out-of-box capabilities to add colored data bars. One thing to note is that such features are not available out-of-box in SSRS, and only in Excel services.

In this report we have added just two ready measures that are available in the cube. But many a time reporting requirements can arise, where we need calculated measures. It can be the case that data warehouses and data marts are governed by other teams, and one might not have the liberty to create these calculated measures in cubes. Also, creating such calculated measures would require a separate development as well as process cycle altogether. OLAP development teams are composed of skilled MDX developers who can develop MDX on-the-fly, whereas report developers are mostly novice in this area. In our example, say we need a simple calculated measure which calculates Internet Sales + Reseller Sales. Fortunately, Analyzer provides a very advanced level though easy to use, MDX building and editing interface.

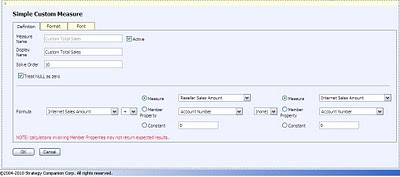

The screenshot that you see below has been activated by right-clicking on the field and selecting "Manage Measures". Here in this screenshot I have defined a calculated measure named "Custom Total Sales", where the formula is to add "Internet Sales Amount" to "Reseller Sales Amount".

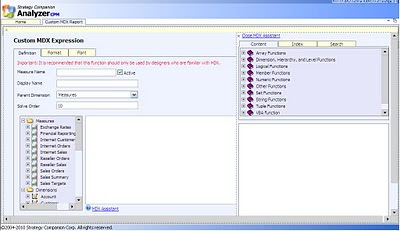

In case you have MDX skills at your disposal and you feel the need to define more complex MDX, Analyzer provides a full-fledged interface to define the MDX of your choice, as shown in the below screenshot. Also you get a help system known as "MDX Assistant” that helps you with different examples for almost all of the MDX programming constructs. This is a very valuable resource, as you do not need to sit with a book by your side or google around, when you are developing or experimenting with a language as complex as MDX.

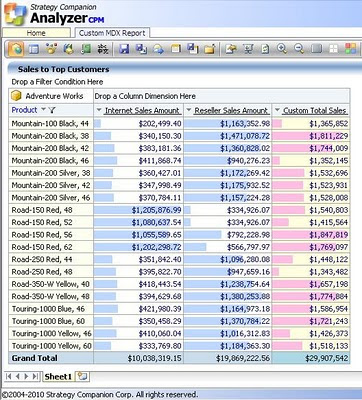

Finally when our custom measure is added to the report, add the colored data bars to all three fields. I have added a blue data bar to both built-in measures, and pink data bar to our custom measure. Just take a look at the below screenshot, and I leave the rest to the imagination of the reader, on how quickly what can be analyzed from this report. We can add lot more details, gauges, indicators, KPIs and other elements to this report. But the intention is not to create a full-fledged dashboard, the intention is to make the report quickly analyzable without trimming the details that an operational report generally contains.

If you want to check out more such features and options that Analyzer provides out-of-box to enrich a plain table report to a report that looks pre-analyzed, try downloading an evaluation version of Analyzer from here. Also try to give a thought on what efforts would be required to develop the report in the above screenshot from the report we had in the first screenshot, using SSRS.

Saturday, April 02, 2011

Load testing / Performance testing SSAS for evaluating scalability

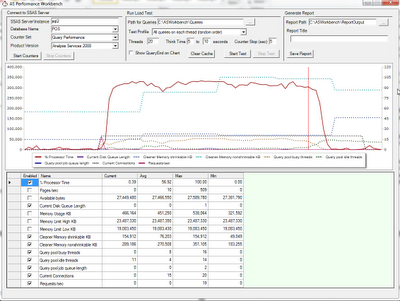

I'm reading: Load testing / Performance testing SSAS for evaluating scalabilityTweet this !APT (Application Performance Testing) is one of the standard environments, among the different environments that are set up in any typical SDLC. Results from APT environment are considered as direct indicator of the capability of any solution to scale. Load Runner is one of the most successful and widely used tools in this environment. Use cases are provided to testing teams, steps for each use case are executed and scripts are recorded, and the same scenario is repeated for a targeted number of users. This creates a simulation of a predefined number of users accessing the solution concurrently using most probably use case scenarios. The results from these tests provide direct insight into scalability, concurrency and other aspects of the solution.

Load Runner though a very useful tool, is more suited to be used by testing professionals. And it's also not a free tool. It's always good to have some load simulators in the hands of developers to load test their development artifacts, so that they can figure out the probable bottleneck areas even before the solution reaches APT environment. Again it's better if such tool is a part of the development IDE itself or if it's a freeware, as business stakeholders won't entertain a separate licensed tool for developers. One such tool of the same flavor for SSAS has newly made entry on Codeplex, and it's known as AS Performance Workbench. It can be seen as a micro version of load runner, but if the tool provides what it claims on the project home page, it would be a brilliant tool to have. I would be more happy if this tool is fused with BIDSHelper. I suggest to download and checkout this tool, and you might find another nuke to store in your arsenal of developer tools.

Friday, April 01, 2011

How to increase your visibility as a Business Intelligence professional

I'm reading: How to increase your visibility as a Business Intelligence professionalTweet this !Firstly before I start sharing my views, I would like to emphasize on one point that I am not a career guidance guru / expert. I share my views and thoughts based on my experience, and you should always use your wisdom to steer you career in the right direction.

Today almost every other IT professional who does not work as a free lancer / trainer, works in one or another, small or big, core IT or IT services of a core organization. Any organization has employees in hundreds to thousands, and when performance of an individual is measured, it's always in terms of percentile and not percentage. So this means you have to compete against all your peers, and you would be rated against the same. Today the industry has become so competitive that employees are not recognized as professionals but just technology resources and many a times it happens that people are treated as commodities. When you approach operations and/or resource management, at one or other stage of your career, you would come across a stage where you would hear that "you do not have a good visibility in the service line / business vertical to which you belong".

Another challenge is that when you change you job / business unit / project, you head on to a new world where almost anyone won't recognize you / your technical capability / your career achievements. The only thing that you would have as a record of everything is a piece of paper, called "Resume". The reason is again, you do not have enough visibility as a professional outside your project / business unit / IT industry. So how to deal with this issue ?

1) Go public: Do not waste your efforts in making your immediate supervisor happy. In my viewpoint, this is a very short term vision. He / She is not going to be your supervisor for life. Invest into efforts that would survive over the period of time. For example instead of creating an copy-pasted document from MSDN to contribute a tutorial in your CoE, invest time in creating an original and useful project on Codeplex.

2) Build your network: Attend tech-eds, user group meetings, webinars etc where you can connect with professionals across the industry. Do not waste time entertaining influential personnel in your organization. This is again short term vision, unless and until you are going to work with the same set of people for your life.

3) Establish your reputation globally: There are open discussion forums like MSDN Forums, Community websites like SQL Server Central and others, development platforms like Codeplex. Make the best use of these platforms to establish your reputation as an expert professional. When you raise your reputation, you raise all the means to raise the height of your visibility.

4) Open a communication channel: Blog is one of the best ways to open a communication channel to the external world. Whether you change your technical area of practice / your job / your base location, people can always reach you through your blog / website and the same acts as a log book of your evolution as a thought leader in your area of expertise.

Even if you follow the above points just in your local city, there is a very high probability that you would stand out from the rest of the queue. The summary of this long theory is that, don't think like a frog in a well, and Go Global to raise the height of your visibility to the best of your capabilities. Believe me, once your reputation reaches a certain level, people would come to you and you won't have to run after people to keep on advertising yourself. Keep in view that reputation gets built over time, it's not an overnight delivery. So start the long term plan for your career right now. Feel free to share your opinions on my viewpoint.

Subscribe to:

Comments (Atom)

Article

Article